We would like to thank Haosen Xing for carefully reviewing the manuscript and for help throughout the project. We thank Kavisha Vidanapathirana for the initial implementation of NSFP++.

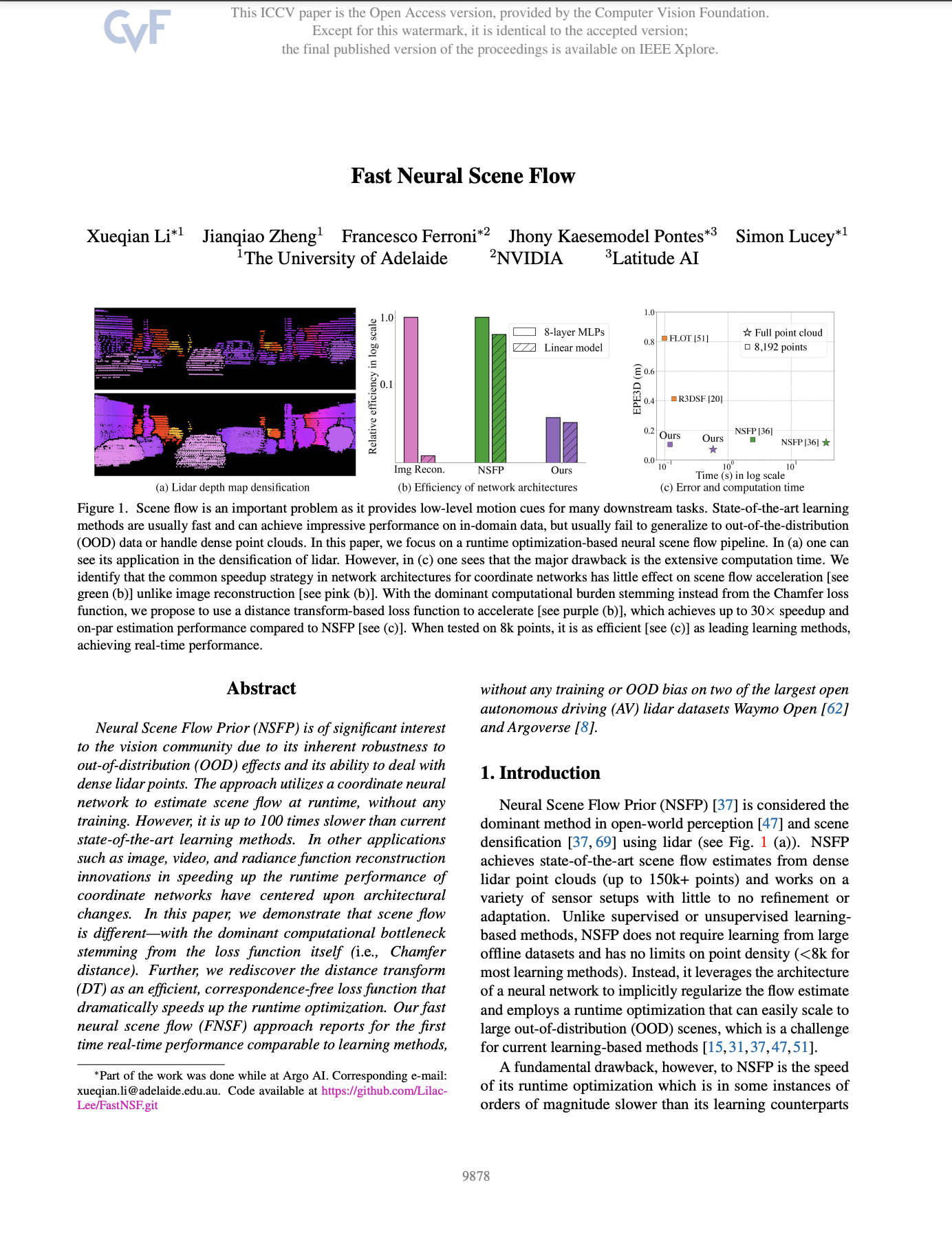

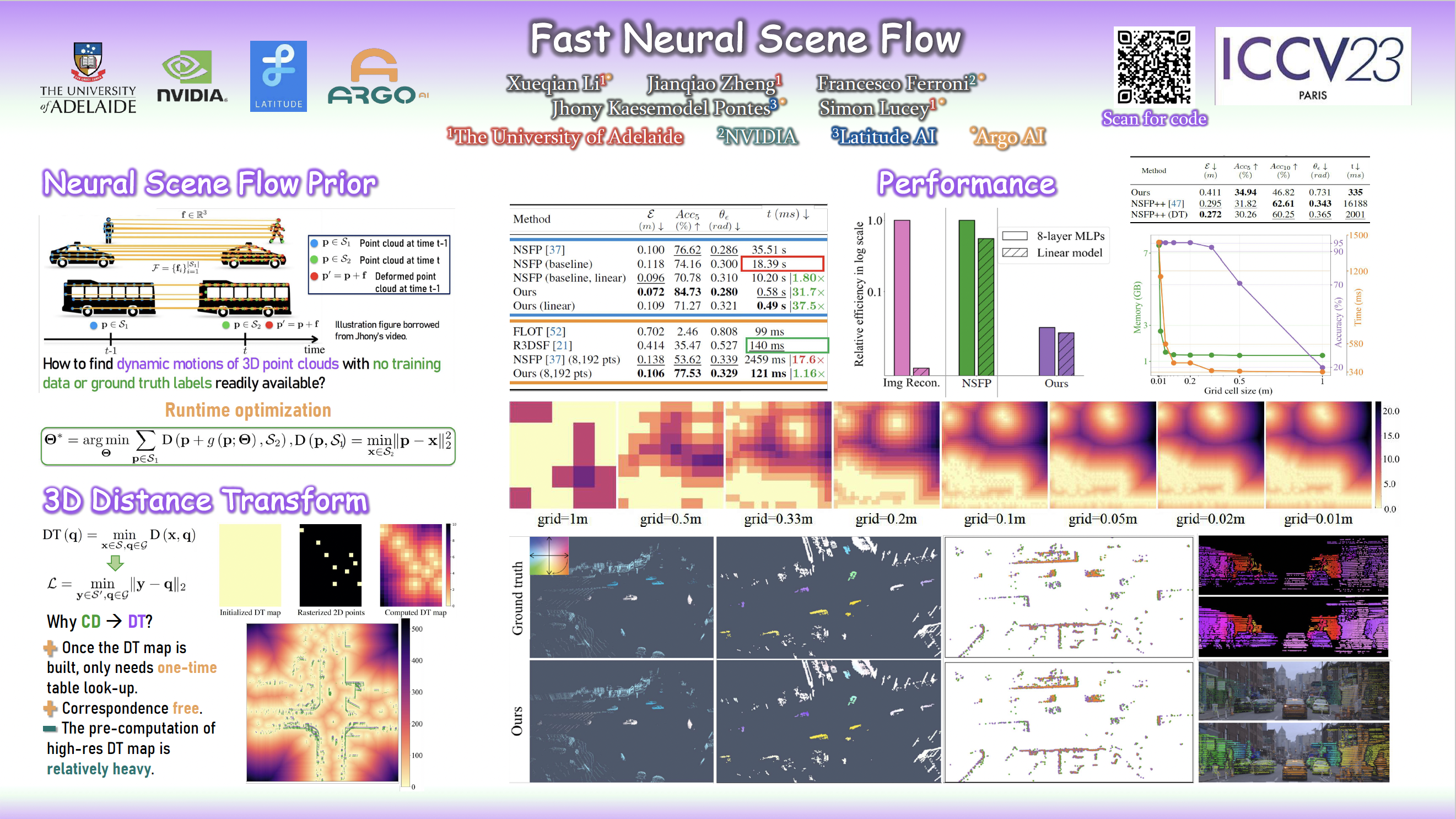

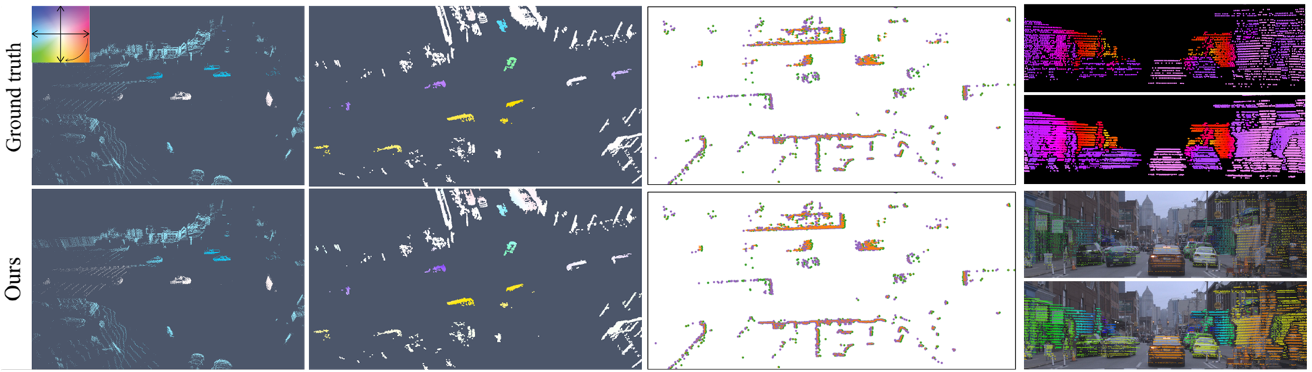

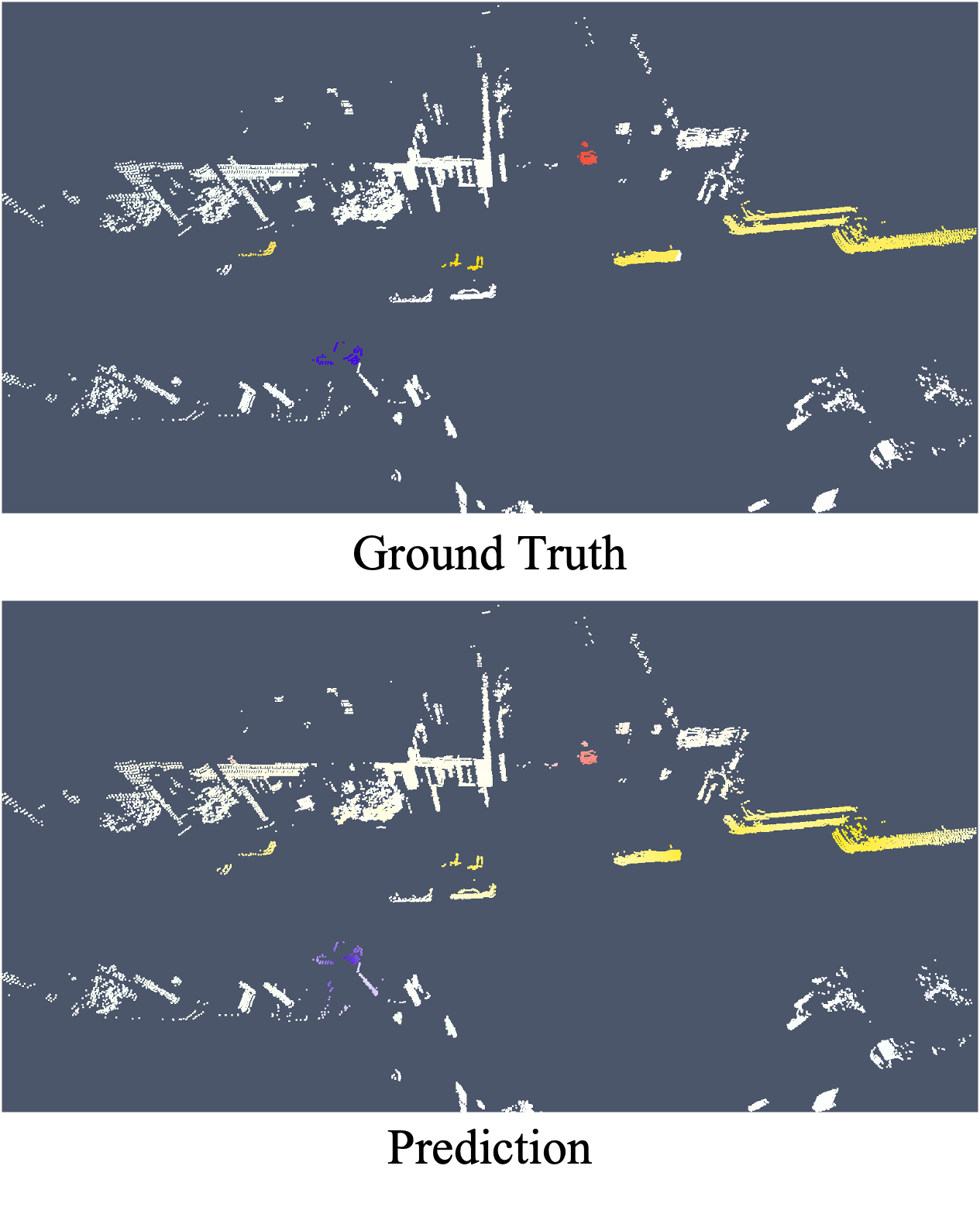

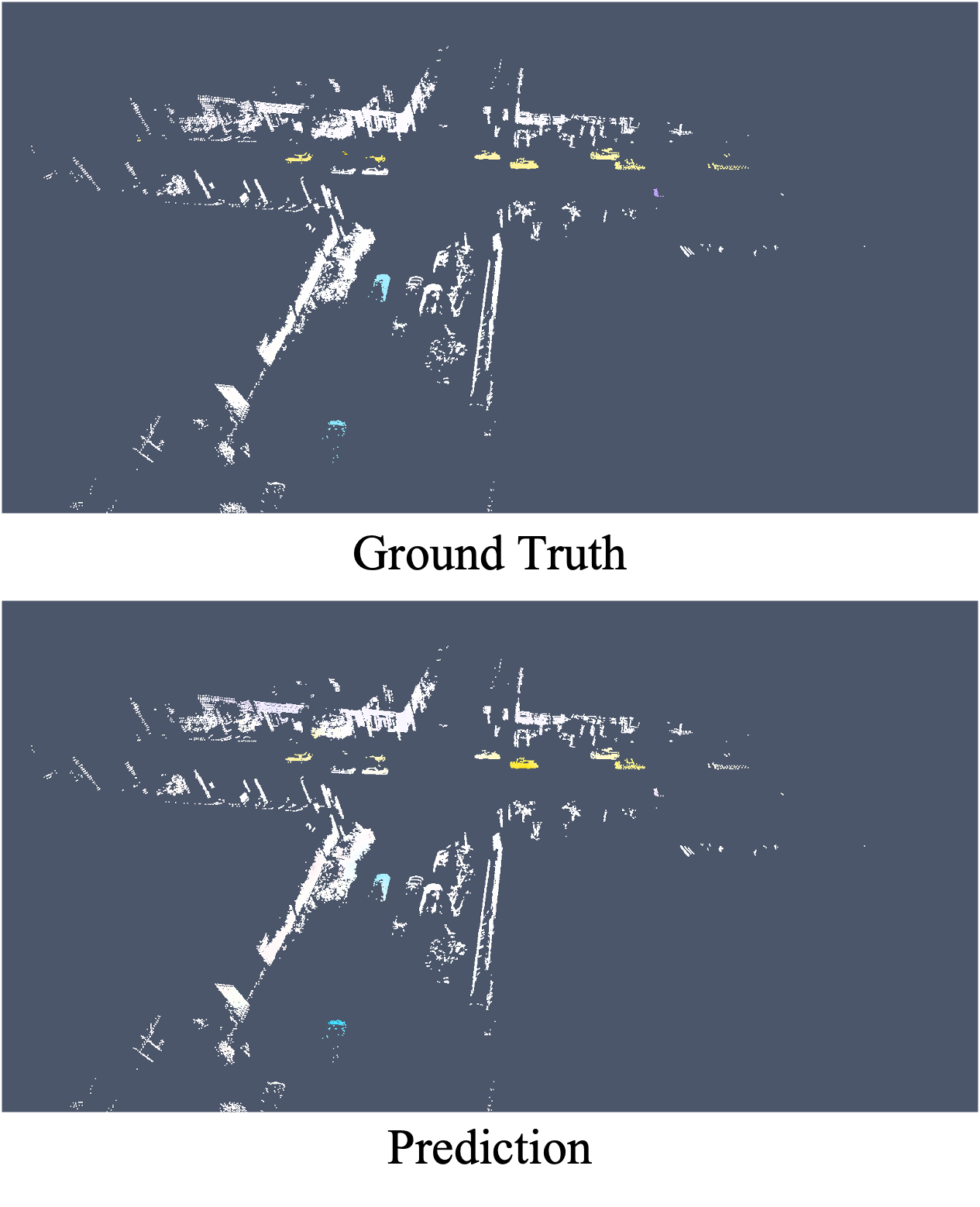

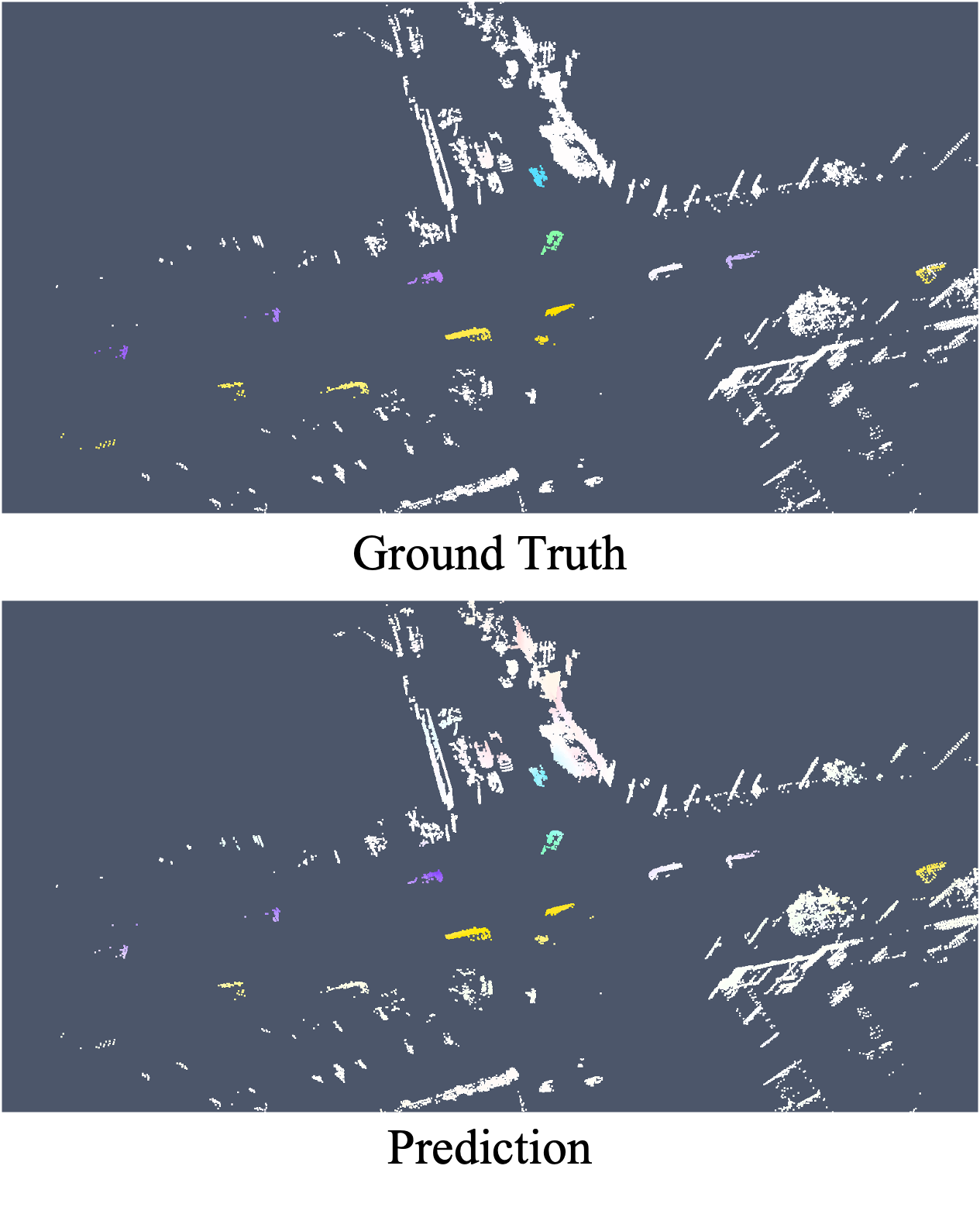

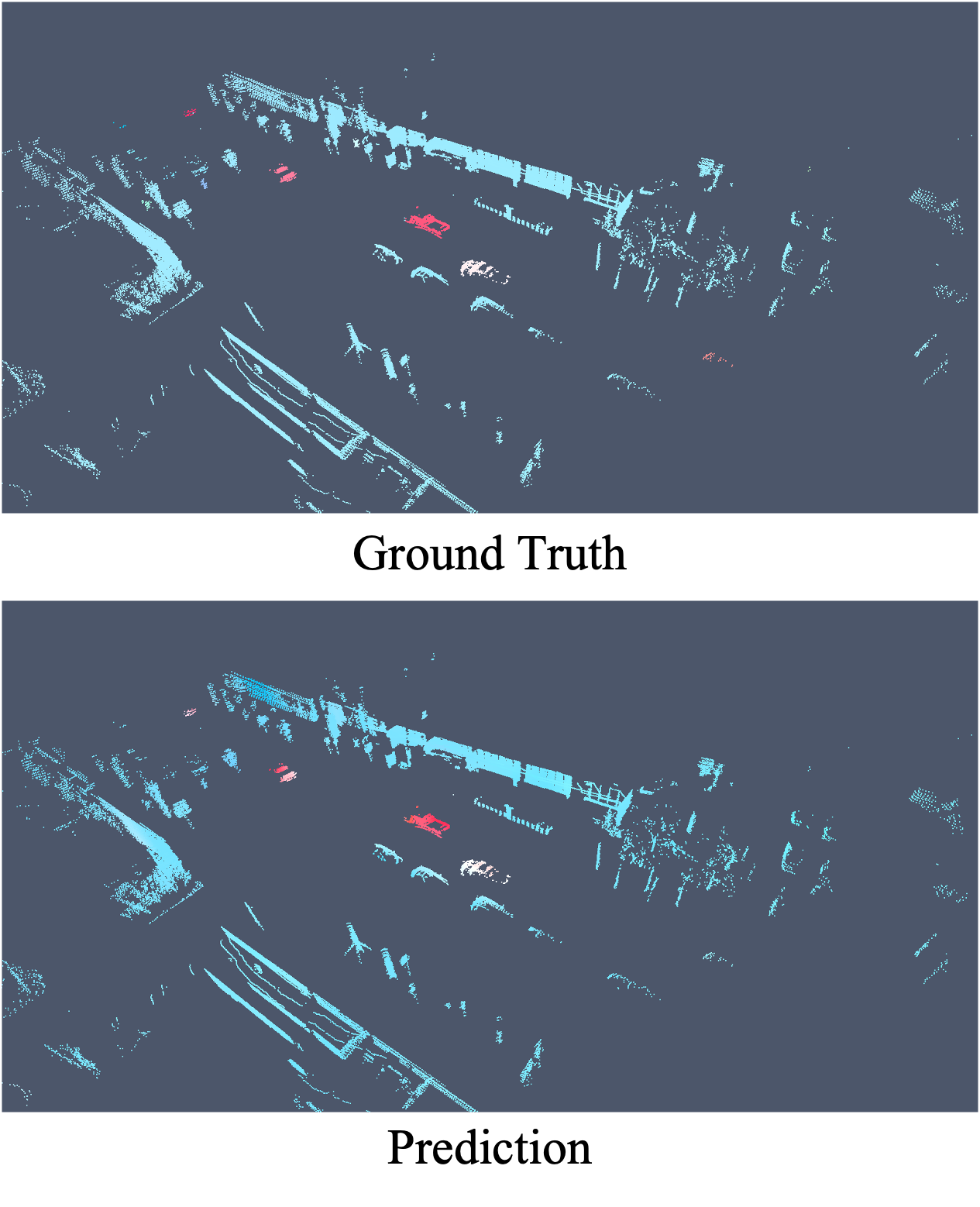

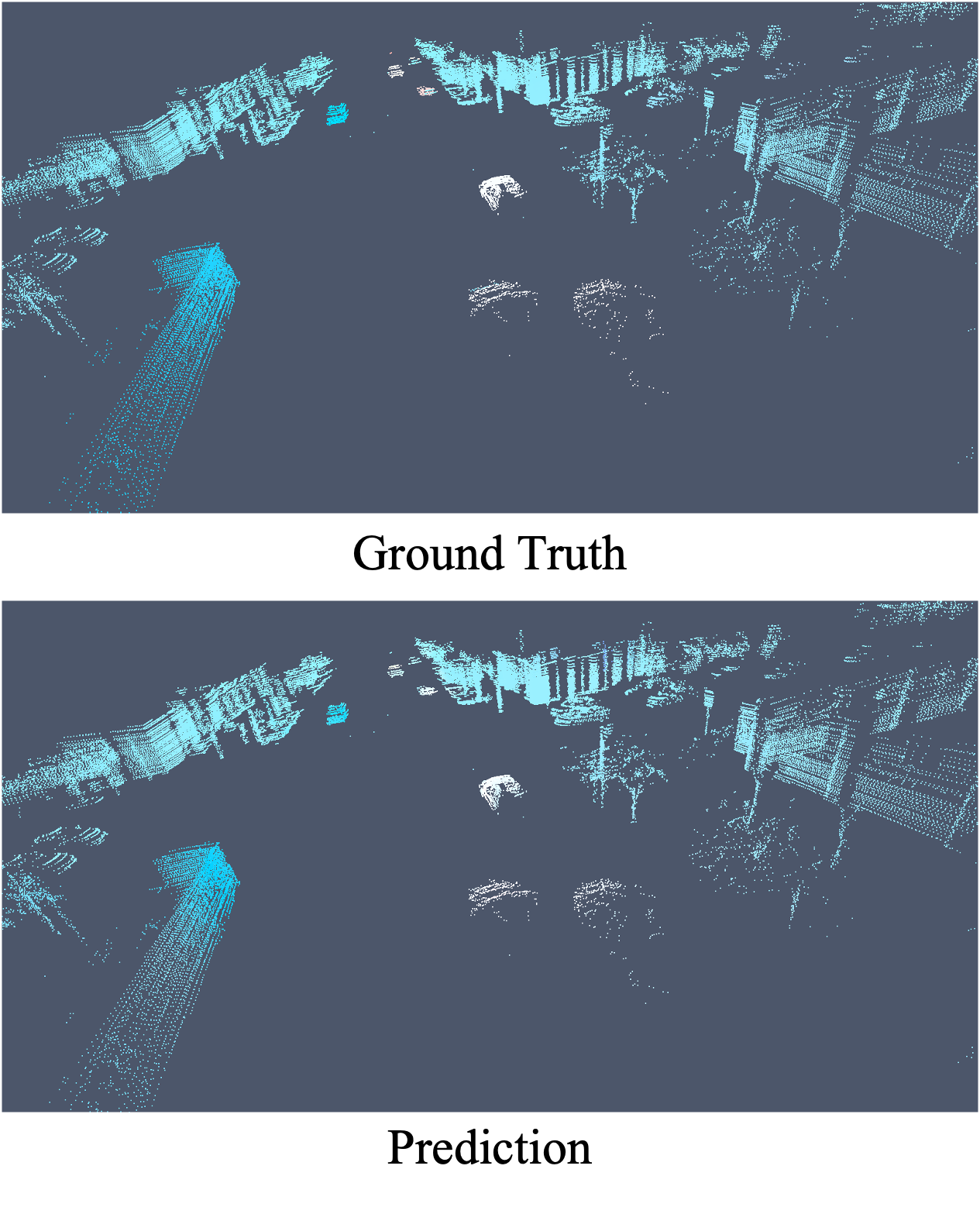

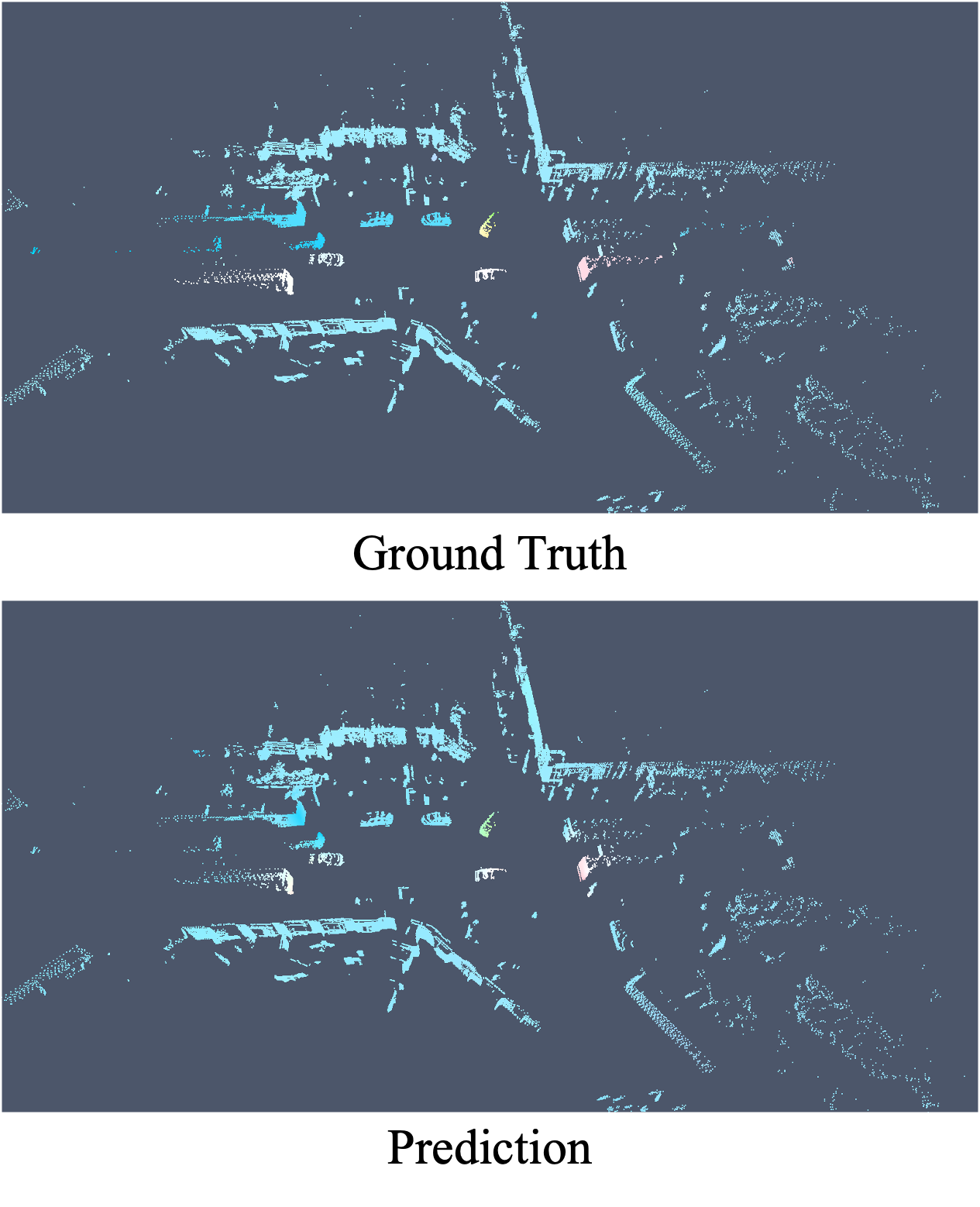

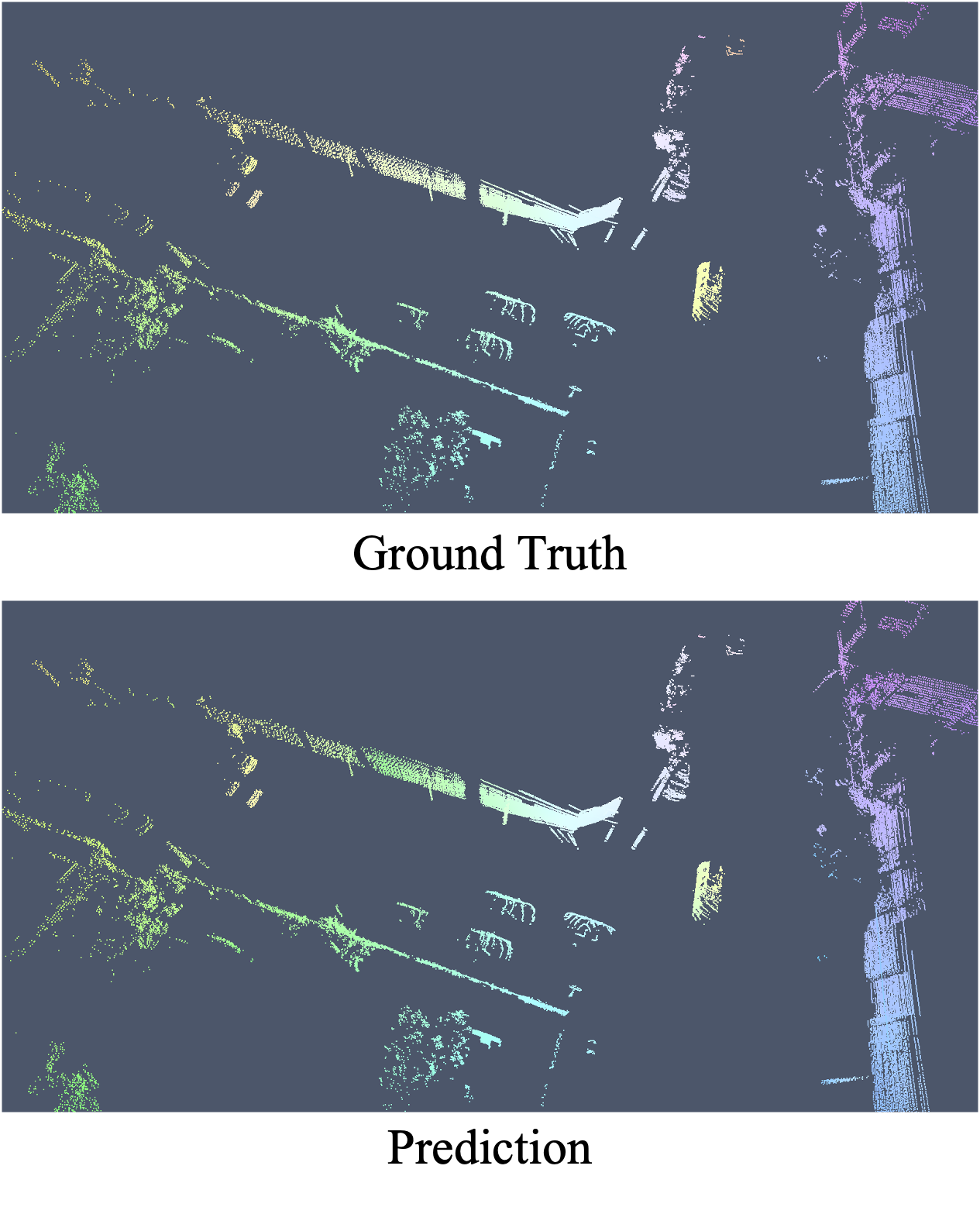

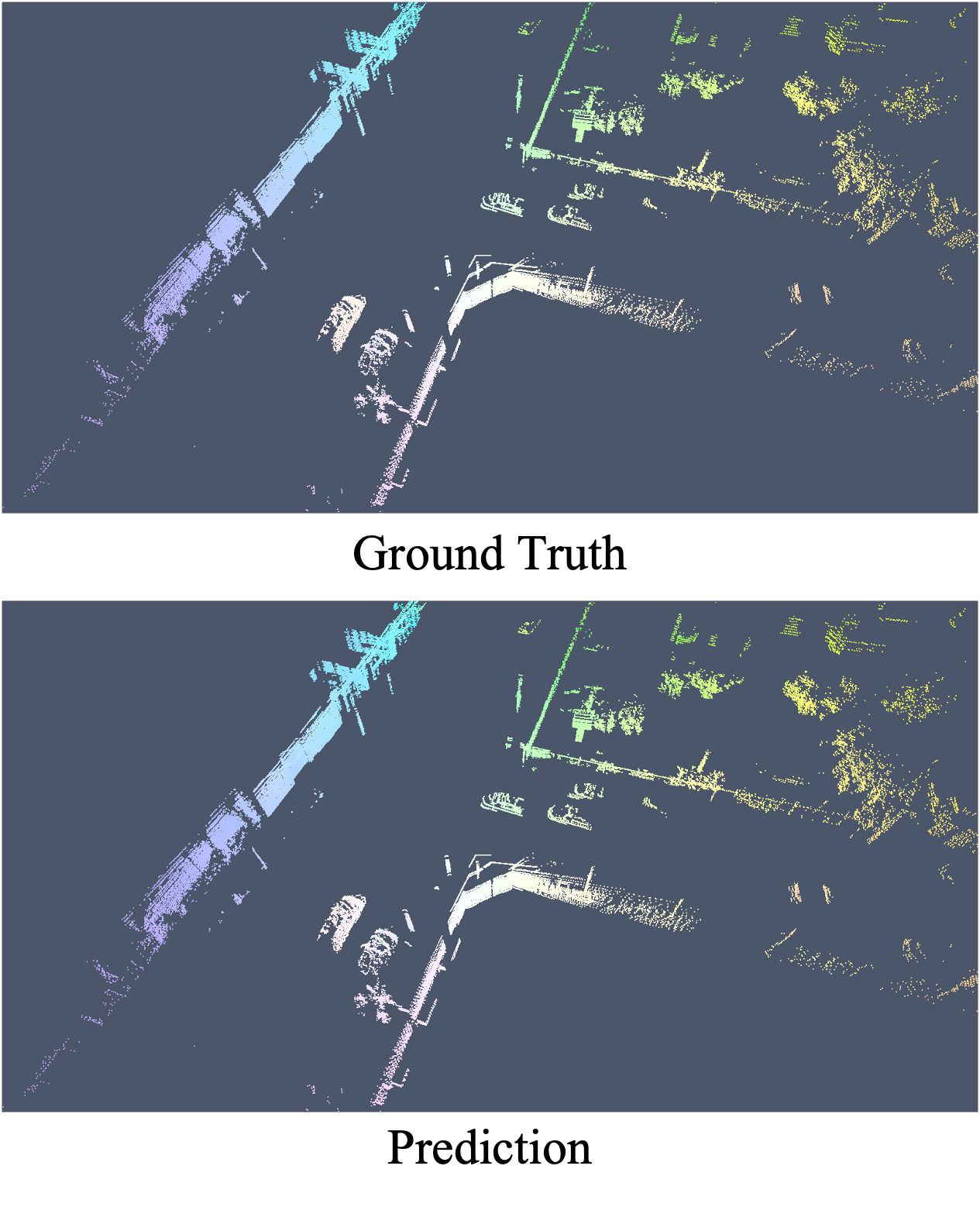

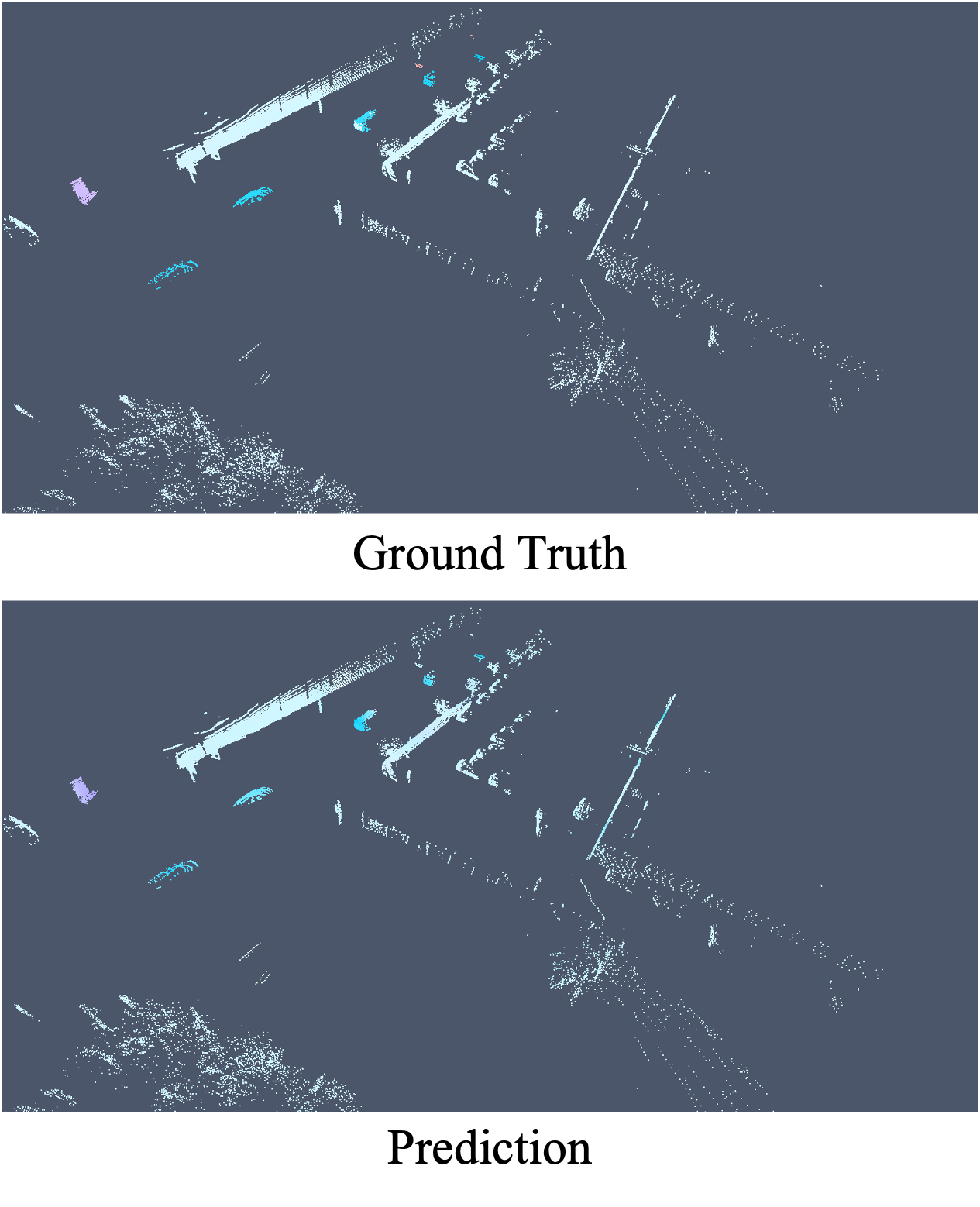

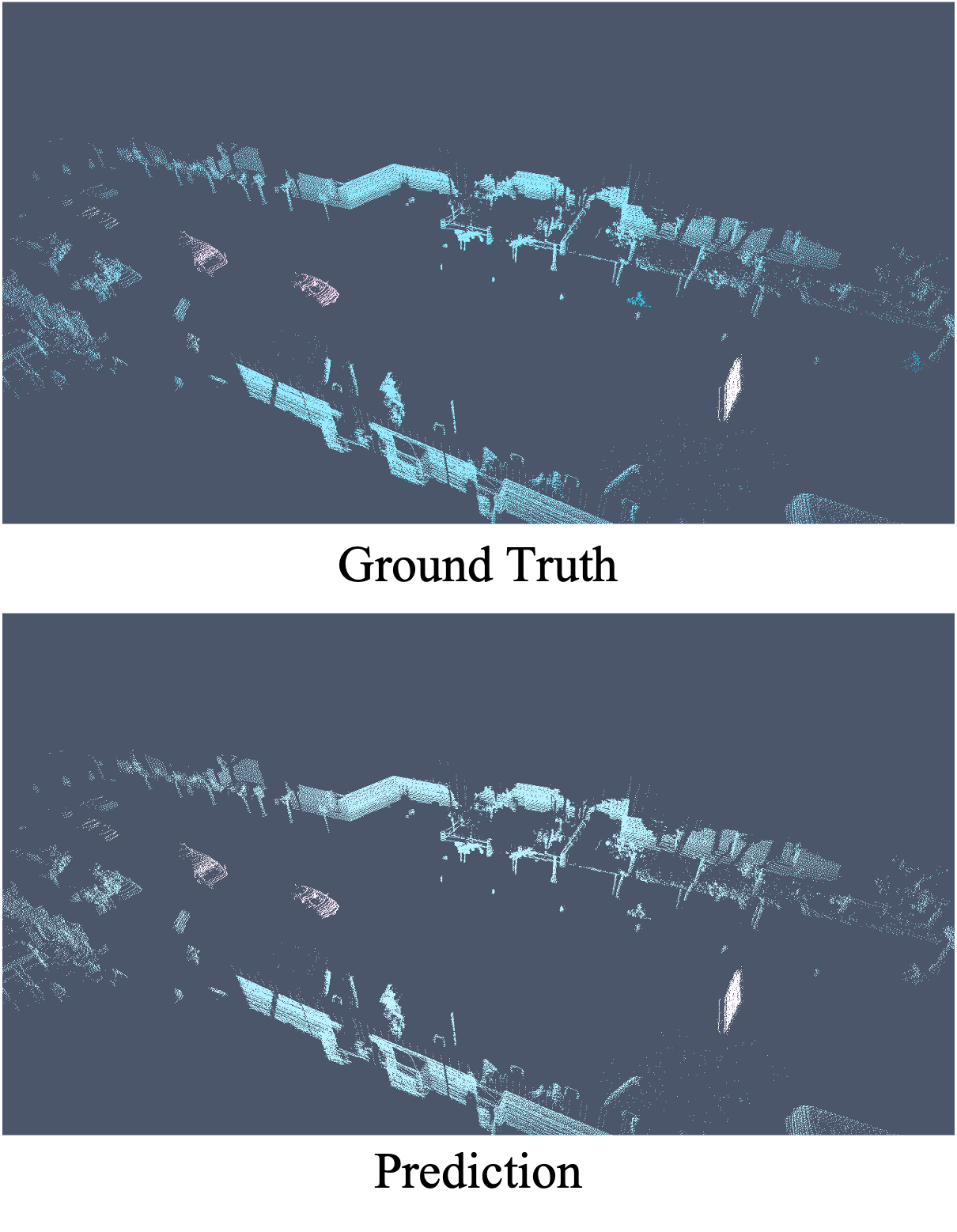

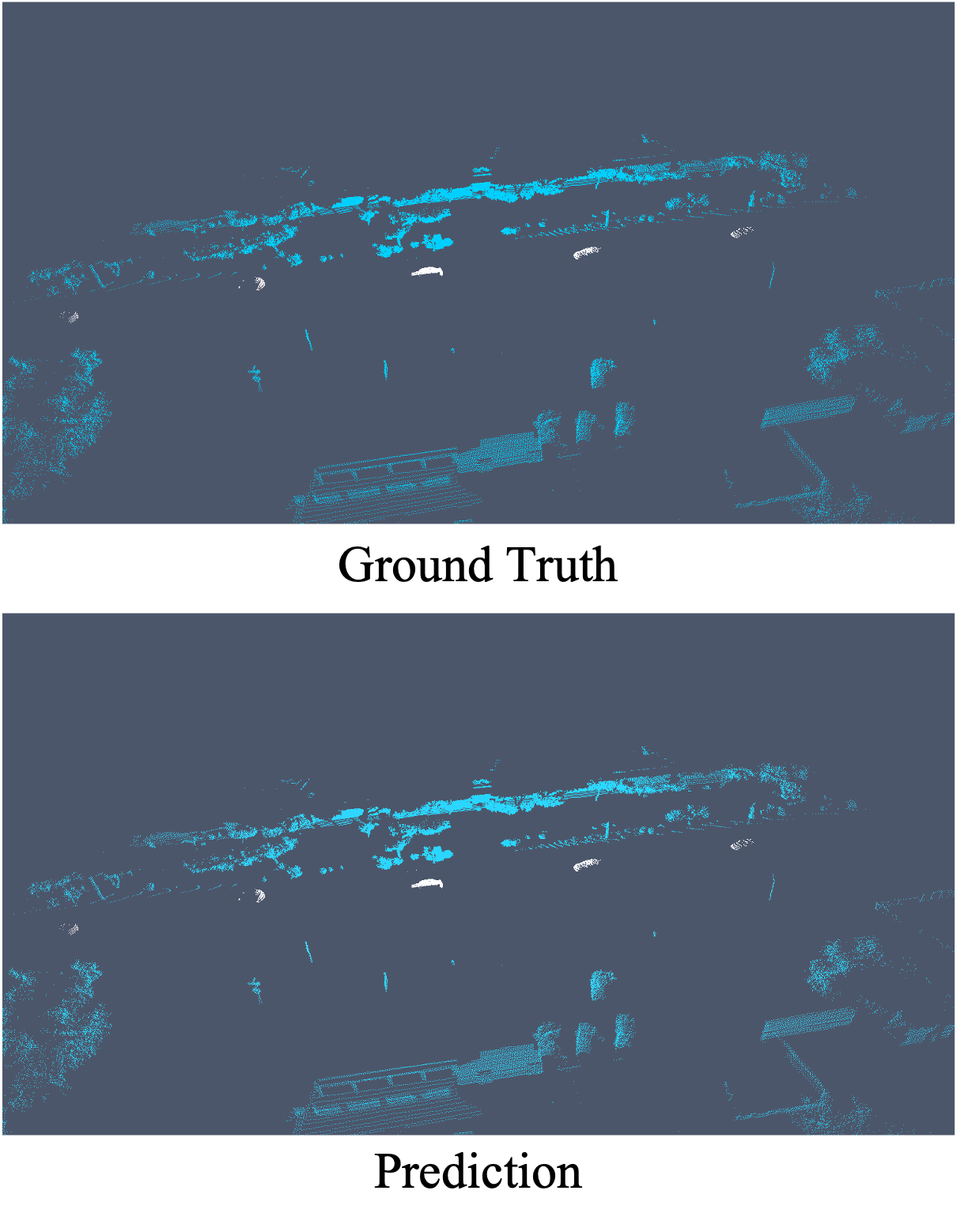

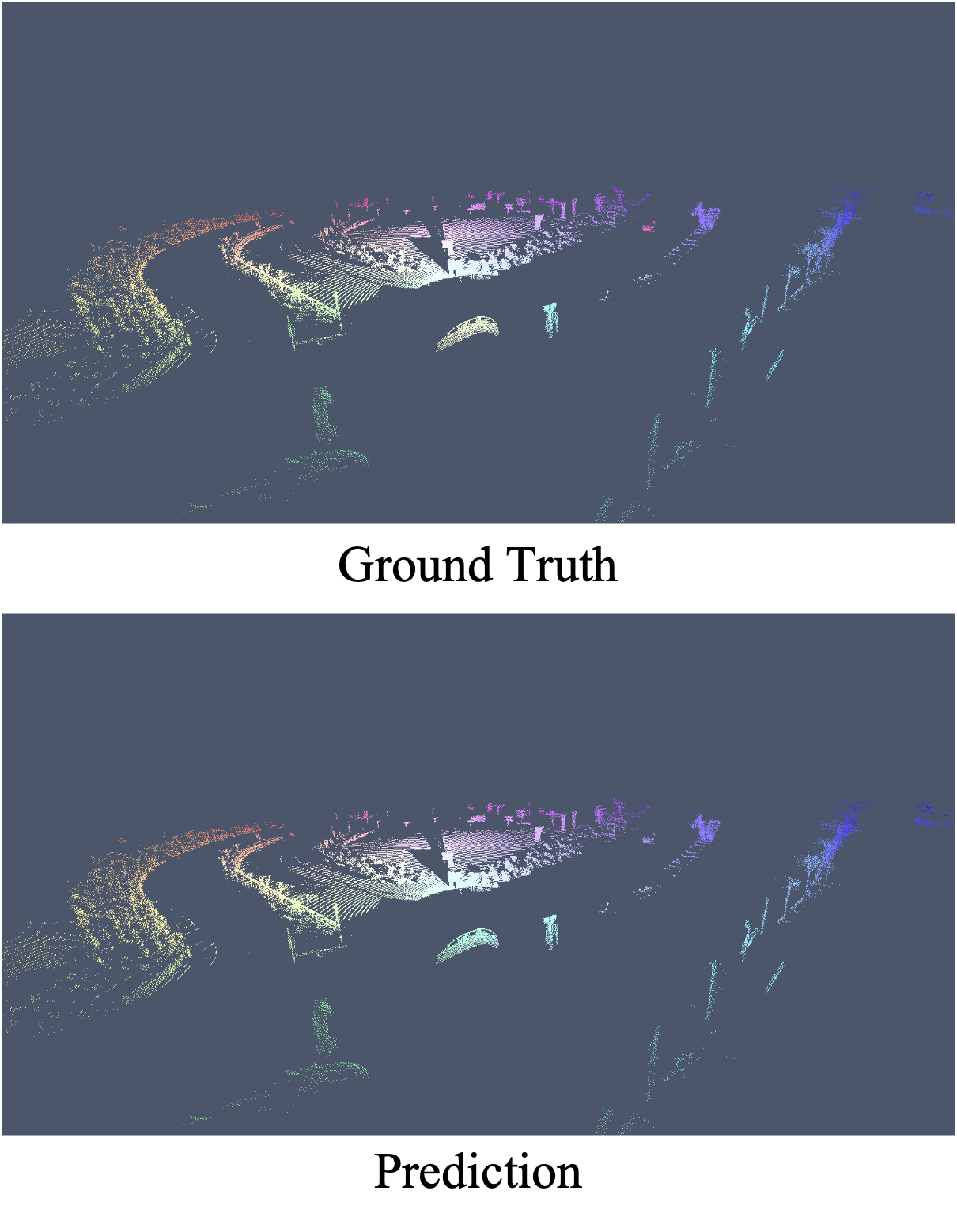

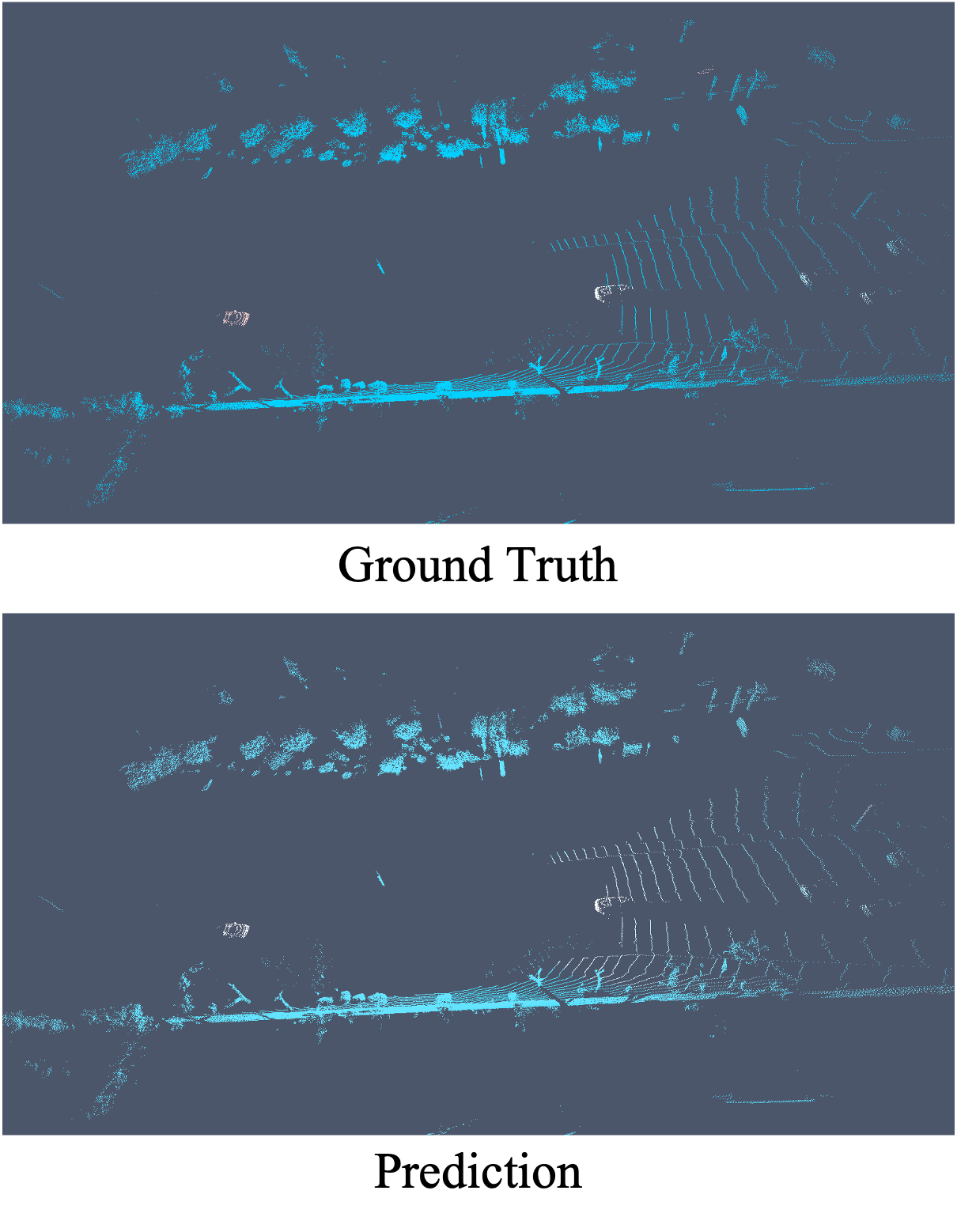

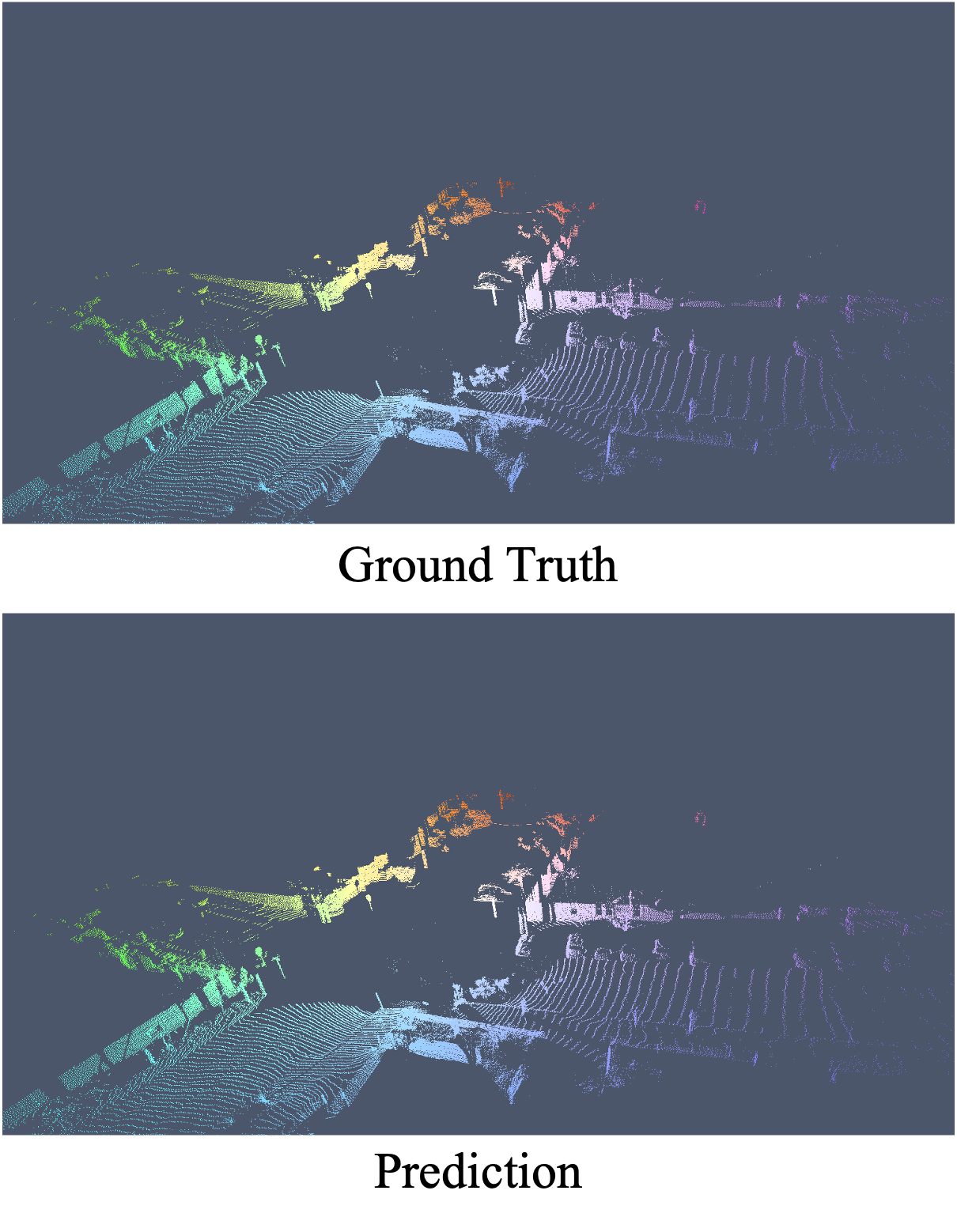

Neural Scene Flow Prior (NSFP) is of significant interest to the vision community due to its inherent robustness to out-of-distribution (OOD) effects and its ability to deal with dense lidar points. The approach uses a coordinate neural network to estimate scene flow at runtime, without any training. However, it is up to 100 times slower than current state-of-the-art learning methods. In other applications, such as image, video, and radiance function reconstruction, innovations in speeding up the runtime performance of coordinate networks have centered on architectural changes. In this paper, we demonstrate that scene flow is different: the dominant computational bottleneck stems from the loss function itself (i.e., the Chamfer distance). Further, we rediscover the distance transform (DT) as an efficient, correspondence-free loss function that dramatically speeds up the runtime optimization. Our fast neural scene flow (FNSF) approach reports, for the first time, real-time performance comparable to learning methods, without any training or OOD bias, on two of the largest open autonomous vehicle (AV) lidar datasets: Waymo Open and Argoverse.
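To make the loss-side bottleneck concrete, the sketch below contrasts a brute-force Chamfer distance with a distance-transform lookup. It is a minimal pure-Python illustration under stated assumptions, not the FNSF implementation. It uses one common Chamfer variant (mean, unsquared distances). The DT is a plain voxel grid queried by nearest-voxel lookup, whereas the actual method may interpolate. The DT loss is one-directional (warped source to target). The names `chamfer_distance`, `DistanceTransform`, `dt_loss`, `voxel`, and `padding` are hypothetical.

```python
import math
from itertools import product
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]


def chamfer_distance(src: Sequence[Point], tgt: Sequence[Point]) -> float:
    """Symmetric Chamfer distance (assumed variant: mean, unsquared).

    Each evaluation runs a brute-force nearest-neighbour search in both
    directions, i.e. O(N * M) work per optimization step.
    """
    def one_way(a: Sequence[Point], b: Sequence[Point]) -> float:
        return sum(min(math.dist(p, q) for q in b) for p in a) / len(a)

    return one_way(src, tgt) + one_way(tgt, src)


class DistanceTransform:
    """Hypothetical helper: a voxel grid storing, at each voxel centre,
    the distance to the nearest target point."""

    def __init__(self, tgt: Sequence[Point], voxel: float = 0.1, padding: float = 0.5):
        self.voxel = voxel
        self.origin = tuple(min(p[i] for p in tgt) - padding for i in range(3))
        upper = tuple(max(p[i] for p in tgt) + padding for i in range(3))
        self.shape = tuple(
            int(math.ceil((upper[i] - self.origin[i]) / voxel)) + 1 for i in range(3)
        )
        # Built once per target frame (brute force here, for clarity only).
        self.grid: Dict[Tuple[int, ...], float] = {}
        for idx in product(*(range(n) for n in self.shape)):
            centre = tuple(self.origin[i] + idx[i] * voxel for i in range(3))
            self.grid[idx] = min(math.dist(centre, q) for q in tgt)

    def query(self, p: Point) -> float:
        """Nearest-voxel lookup clamped to the grid: no neighbour search."""
        idx = tuple(
            min(max(int(round((p[i] - self.origin[i]) / self.voxel)), 0), self.shape[i] - 1)
            for i in range(3)
        )
        return self.grid[idx]


def dt_loss(warped: Sequence[Point], dt: DistanceTransform) -> float:
    """Correspondence-free loss: mean DT value at the warped source points, O(N)."""
    return sum(dt.query(p) for p in warped) / len(warped)


if __name__ == "__main__":
    target = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    # Stand-in for source points moved by a predicted flow.
    warped = [(x + 0.05, y, z) for x, y, z in target]
    dt = DistanceTransform(target)
    print(chamfer_distance(warped, target), dt_loss(warped, dt))
```

Because the grid depends only on the target frame, it can be built once and reused across every optimization iteration, so each step costs a lookup per point rather than a nearest-neighbour search. Since distance-to-a-set is 1-Lipschitz, the nearest-voxel error in this sketch is at most half a voxel diagonal.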

title={Fast Neural Scene Flow},
author={Li, Xueqian and Zheng, Jianqiao and Ferroni, Francesco and Pontes, Jhony Kaesemodel and Lucey, Simon},
booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
month={October},
year={2023},
pages={9878-9890}
}

This template was inspired by project pages from Chen-Hsuan Lin and Richard Zhang.